Like fractals, complex systems can be self-similar.

Fractal

From Wikipedia, the free encyclopedia

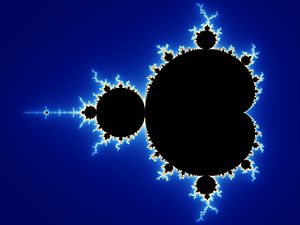

The Mandelbrot set is a famous example of a fractal

A fractal is generally "a rough or fragmented geometric shape that can be split into parts, each of which is (at least approximately) a reduced-size copy of the whole,"[1] a property called self-similarity. Mathematical interest in fractals can be traced back to the late 19th century; however, the term "fractal" was coined by Benoît Mandelbrot in 1975 and was derived from the Latin fractus meaning "broken" or "fractured." A mathematical fractal is based on an equation that undergoes iteration, a form of feedback based on recursion.[2]
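To make "an equation that undergoes iteration" concrete, here is a minimal sketch of the standard escape-time test for the Mandelbrot set, which repeatedly applies z → z² + c starting from z = 0. The function names and the `max_iter` cutoff are my own choices for illustration and do not come from the quoted article.

```python
def escape_time(c: complex, max_iter: int = 100) -> int:
    """Iterate z -> z*z + c from z = 0 and count steps until |z| exceeds 2.

    Returns max_iter if the orbit stays bounded for the whole run, which is
    treated here as "probably inside the Mandelbrot set".
    """
    z = 0j
    for n in range(max_iter):
        if abs(z) > 2.0:  # once |z| > 2 the orbit is known to diverge
            return n
        z = z * z + c     # the feedback step: output becomes the next input
    return max_iter


def in_mandelbrot(c: complex, max_iter: int = 100) -> bool:
    """Approximate membership test; accuracy depends on max_iter."""
    return escape_time(c, max_iter) == max_iter


if __name__ == "__main__":
    # Coarse ASCII rendering: '#' marks points whose orbit stayed bounded.
    for row in range(-10, 11):
        y = row / 10 * 1.2
        print("".join(
            "#" if in_mandelbrot(complex(col / 30 * 2.5 - 0.75, y)) else "."
            for col in range(-30, 31)
        ))
```

The cutoff is a practical approximation: a point that survives `max_iter` steps is only assumed to be in the set, so raising the cutoff sharpens the boundary at the cost of more computation.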

A fractal often has the following features:[3]

- It has a fine structure at arbitrarily small scales.

- It is too irregular to be easily described in traditional Euclidean geometric language.

- It is self-similar (at least approximately or stochastically).

- It has a Hausdorff dimension which is greater than its topological dimension (although this requirement is not met by space-filling curves such as the Hilbert curve).[4]

- It has a simple and recursive definition.

Because they appear similar at all levels of magnification, fractals are often considered to be infinitely complex (in informal terms). Natural objects that approximate fractals to a degree include clouds, mountain ranges, lightning bolts, coastlines, snowflakes, various vegetables (cauliflower and broccoli), and animal coloration patterns. However, not all self-similar objects are fractals. For example, the real line (a straight Euclidean line) is formally self-similar but fails to have other fractal characteristics; for instance, it is regular enough to be described in Euclidean terms.
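The dimension criterion in the feature list can be checked with a little arithmetic. For a shape built from N copies of itself, each scaled by a factor r, the similarity dimension is log N / log(1/r); for simple, non-overlapping constructions such as the Koch curve and the Sierpinski triangle, it equals the Hausdorff dimension. The sketch below uses those two well-known examples and the straight line; the function name is my own.

```python
import math


def similarity_dimension(copies: int, scale: float) -> float:
    """Similarity dimension of a shape made of `copies` pieces,
    each a copy of the whole shrunk by the factor `scale` (0 < scale < 1)."""
    if copies < 1 or not (0.0 < scale < 1.0):
        raise ValueError("need copies >= 1 and 0 < scale < 1")
    return math.log(copies) / math.log(1.0 / scale)


# Koch curve: 4 copies, each 1/3 the size (topological dimension 1).
koch = similarity_dimension(4, 1 / 3)

# Sierpinski triangle: 3 copies, each 1/2 the size (topological dimension 1).
sierpinski = similarity_dimension(3, 1 / 2)

# Straight line segment: 2 copies, each 1/2 the size.
line = similarity_dimension(2, 1 / 2)

if __name__ == "__main__":
    print(f"Koch curve:          {koch:.4f}")
    print(f"Sierpinski triangle: {sierpinski:.4f}")
    print(f"Line segment:        {line:.4f}")
```

The Koch curve and the Sierpinski triangle come out strictly between 1 and 2, above their topological dimension of 1, while the line segment comes out at exactly 1. That matches the point above: the line is self-similar, but its dimension does not exceed its topological dimension, so it is not a fractal.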

Gigaom has an article about the complexity of a smart grid electric vehicle system.

Report: IT and Networking Issues for the Electric Vehicle Market

John Gartner

Clint Wheelock

- Monday, September 21, 2009

Summary:

This Pike Research report focuses on the IT and networking requirements associated with technology support systems for the emerging Electric Vehicle (EV) market. Key areas covered include vehicle connection and identification, energy transfer and vehicle-to-grid systems, communications platforms, pricing and billing systems and implementation issues.

The new generation of mass-produced EVs (including both plug-in and all-electrics) that will start arriving in 2010 will be able to charge at the owner’s residence, place of business, or any number of public and private charging stations. Keeping track of the ability of these vehicles and the grid to transfer energy will require transmitting data over old and new communications pathways using a series of developing and yet-to-be-written standards.

Industries that previously had little to no interaction with each other are now collaborating, determining new technologies and standard protocols and formats for sharing data. Formerly isolated networks must be able to handshake and seamlessly share volumes of financial and performance data. EV charging transactions will, for the first time, bring together platforms including vehicle operating systems and power management systems, utility billing systems, grid performance data, charging equipment applications, fixed and wireless communications networks, and web services.

When you look at the complexity of this system, it looks remarkably like the set of issues involved in putting a real-time energy monitoring system into a data center.

- Executive Summary

- Vehicle Connection and Identification

- Building Codes

- Battery Status

- Managing Vehicle-Grid Interaction

- Power Transfer

- Timed Power Transfer

- Communications Between Charging Locations and the Grid

- Home Area Networks

- Smart Meters

- Communications Channels

- Broadband

- ZigBee

- Powerline Networking

- Cellular Networks

- Utility Interaction with Customers

- Real-time Energy Pricing

- Enabling Vehicles to Respond to Grid Conditions

- Renewable Energy

- Future Vehicle to Grid (V2G) Applications

- Implementation Issues

- Cost

- Standards in Flux

- Clash of Multiple Industries

- Control

- Privacy